From The Conversation.

Facebook announced last week it would discontinue the partner programs that allow advertisers to use third-party data from companies such as Acxiom, Experian and Quantium to target users.

Graham Mudd, Facebook’s product marketing director, said in a statement:

We want to let advertisers know that we will be shutting down Partner Categories. This product enables third party data providers to offer their targeting directly on Facebook. While this is common industry practice, we believe this step, winding down over the next six months, will help improve people’s privacy on Facebook.

Few people seemed to notice, and that’s hardly surprising. These data brokers operate largely in the background.

The invisible industry worth billions

In 2014, one researcher described the entire industry as “largely invisible”. That’s no mean feat, given how much money is being made. Personal data has been dubbed the “new oil”, and data brokers are very efficient miners. In the 2018 fiscal year, Acxiom expects annual revenue of approximately US$945 million.

The data broker business model involves accumulating information about internet users (and non-users) and then selling it. As such, data brokers have highly detailed profiles on billions of individuals, comprising age, race, sex, weight, height, marital status, education level, politics, shopping habits, health issues, holiday plans, and more.

These profiles come not just from data you’ve shared, but from data shared by others, and from data that’s been inferred. In its 2014 report into the industry, the US Federal Trade Commission (FTC) showed how a single data broker had 3,000 “data segments” for nearly every US consumer.

Based on the interests inferred from this data, consumers are then placed in categories such as “dog owner” or “winter activity enthusiast”. However, some categories are potentially sensitive, including “expectant parent”, “diabetes interest” and “cholesterol focus”, or involve ethnicity, income and age. The FTC’s Jon Leibowitz described data brokers as the “unseen cyberazzi who collect information on all of us”.

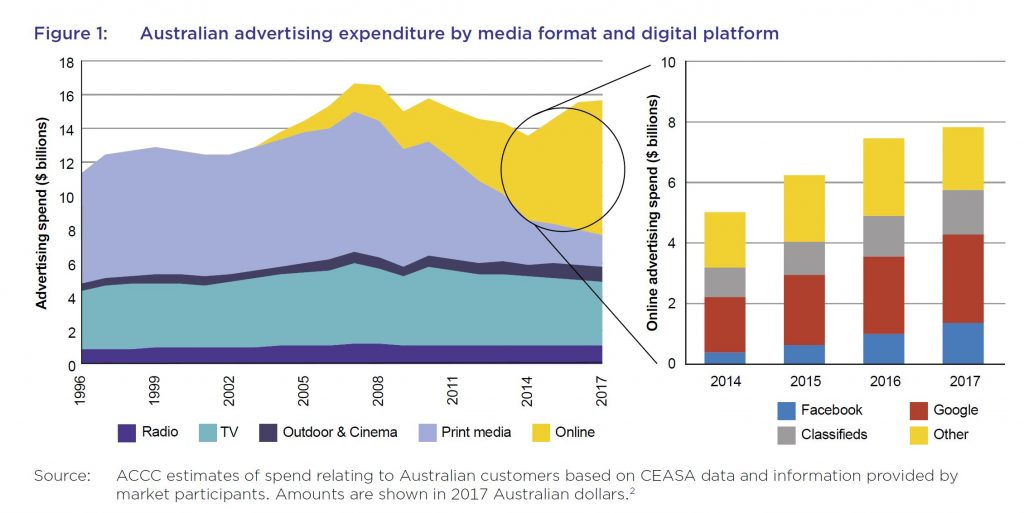

In Australia, Facebook launched the Partner Categories program in 2015. Its aim was to “reach people based on what they do and buy offline”. This includes demographic and behavioural data, such as purchase history and home ownership status, which might come from public records, loyalty card programs or surveys. In other words, Partner Categories enables advertisers to use data brokers to reach specific audiences. This is particularly useful for companies that don’t have their own customer databases.

A growing concern

Third-party access to personal data is causing increasing concern. This week, Grindr was shown to be revealing its users’ HIV status to third parties. Such news is unsettling: it is as if corporate eavesdroppers are listening in on even our most intimate online engagements.

The recent Cambridge Analytica furore also stemmed from third parties. Indeed, apps created by third parties have proved particularly problematic for Facebook. From 2007 to 2014, Facebook encouraged external developers to create apps that let users add content, play games, share photos, and so on.

Facebook then gave the app developers wide-ranging access to user data, and to users’ friends’ data. The data shared might include details of schooling, favourite books and movies, or political and religious affiliations.

As one group of privacy researchers noted in 2011, this process, “which nearly invisibly shares not just a user’s, but a user’s friends’ information with third parties, clearly violates standard norms of information flow”.

With the Partner Categories program, the buying, selling and aggregation of user data may be largely hidden, but is it unethical? The fact that Facebook has moved to stop the arrangement suggests that it might be.

More transparency and more respect for users

To date, there has been insufficient transparency, insufficient fairness and insufficient respect for user consent. This applies to Facebook, but also to app developers, and to Acxiom, Experian, Quantium and other data brokers.

Users might have clicked “agree” to terms and conditions that contained a clause ostensibly authorising such sharing of data. However, it’s hard to see this type of consent as morally justifying the practice.

In Australia, new laws are needed. Data flows in complex and unpredictable ways online, and legislation ought to require, under threat of significant penalties, that companies (and others) abide by reasonable principles of fairness and transparency when they deal with personal information. Such legislation can also specify what sort of consent is required, and in which contexts. Currently, the Privacy Act doesn’t go far enough, and is too rarely invoked.

In its 2014 report, the US Federal Trade Commission called for laws that would enable consumers to learn about the existence and activities of data brokers. That should be a starting point for Australia too: consumers ought to have reasonable access to information held by these entities.

Time to regulate

Having resisted regulation since 2004, Mark Zuckerberg has finally conceded that Facebook should be regulated – and advocated for laws mandating transparency for online advertising.

Historically, Facebook has made a point of championing openness, yet the company itself has often operated with a distinct lack of openness and transparency. Data brokers have been even worse.

Facebook’s motto used to be “Move fast and break things”. Now Facebook, data brokers and other third parties need to work with lawmakers to move fast and fix things.

Author: Sacha Molitorisz, Postdoctoral Research Fellow, Centre for Media Transition, Faculty of Law, University of Technology Sydney